Welcome to Teammately

Hello, welcome to Teammately Docs!

Tom, the founder, is writing this article as our greeting message!

This website hosts the technical docs that describe in detail how you can use Teammately. Before going into details, though, I'd like to share our product philosophy and concept first. I believe this will ultimately make it easier for you to understand what you can achieve with us.

Teammately is a tool that redefines how you, as a Human AI-Engineer, build AI.

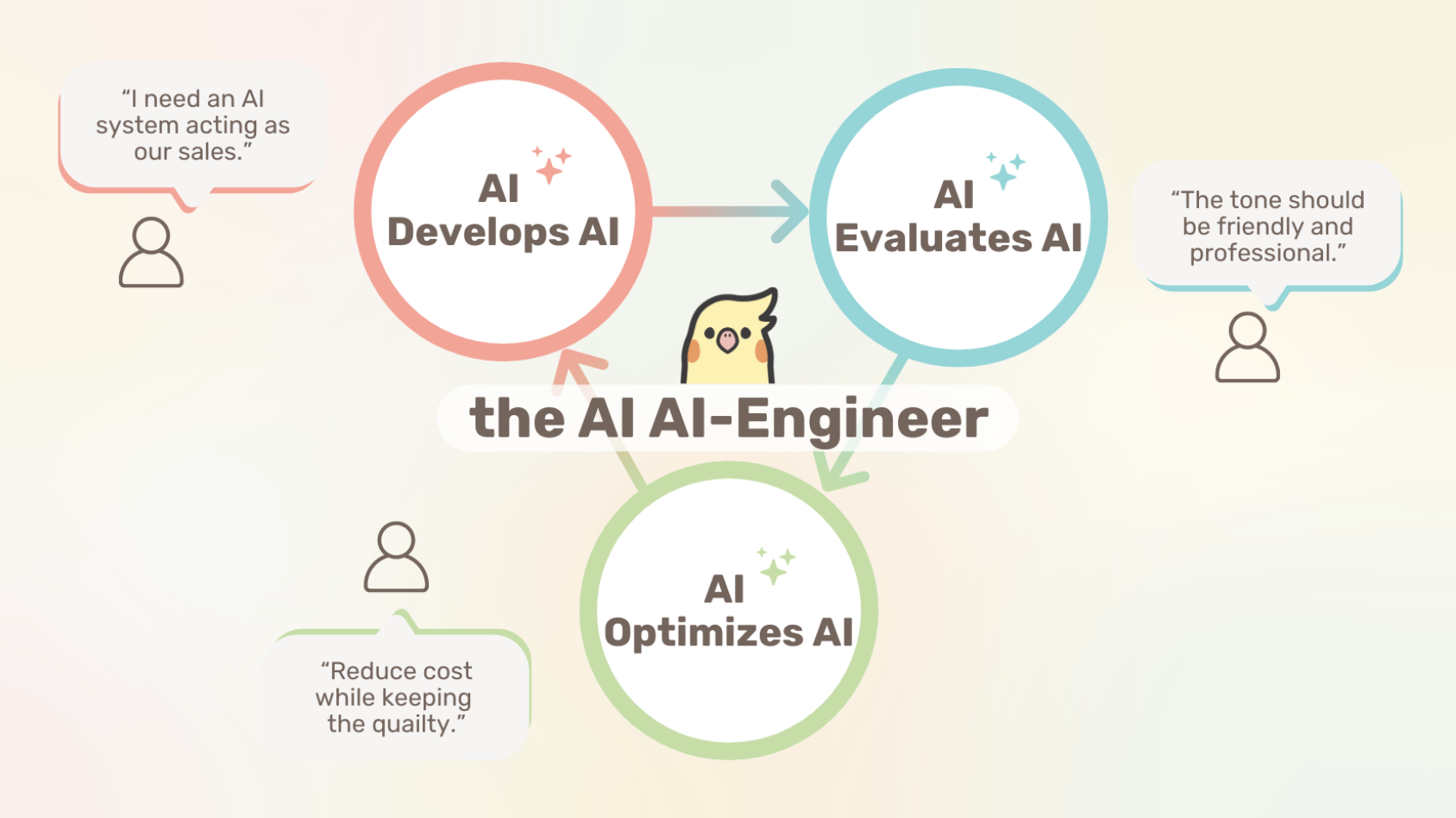

Teammately is an Agentic AI for the AI development process, designed to let Human AI-Engineers focus on more creative and productive missions in AI development. We call this Agentic system the "AI AI-Engineer".

How our AI AI-Engineer is designed

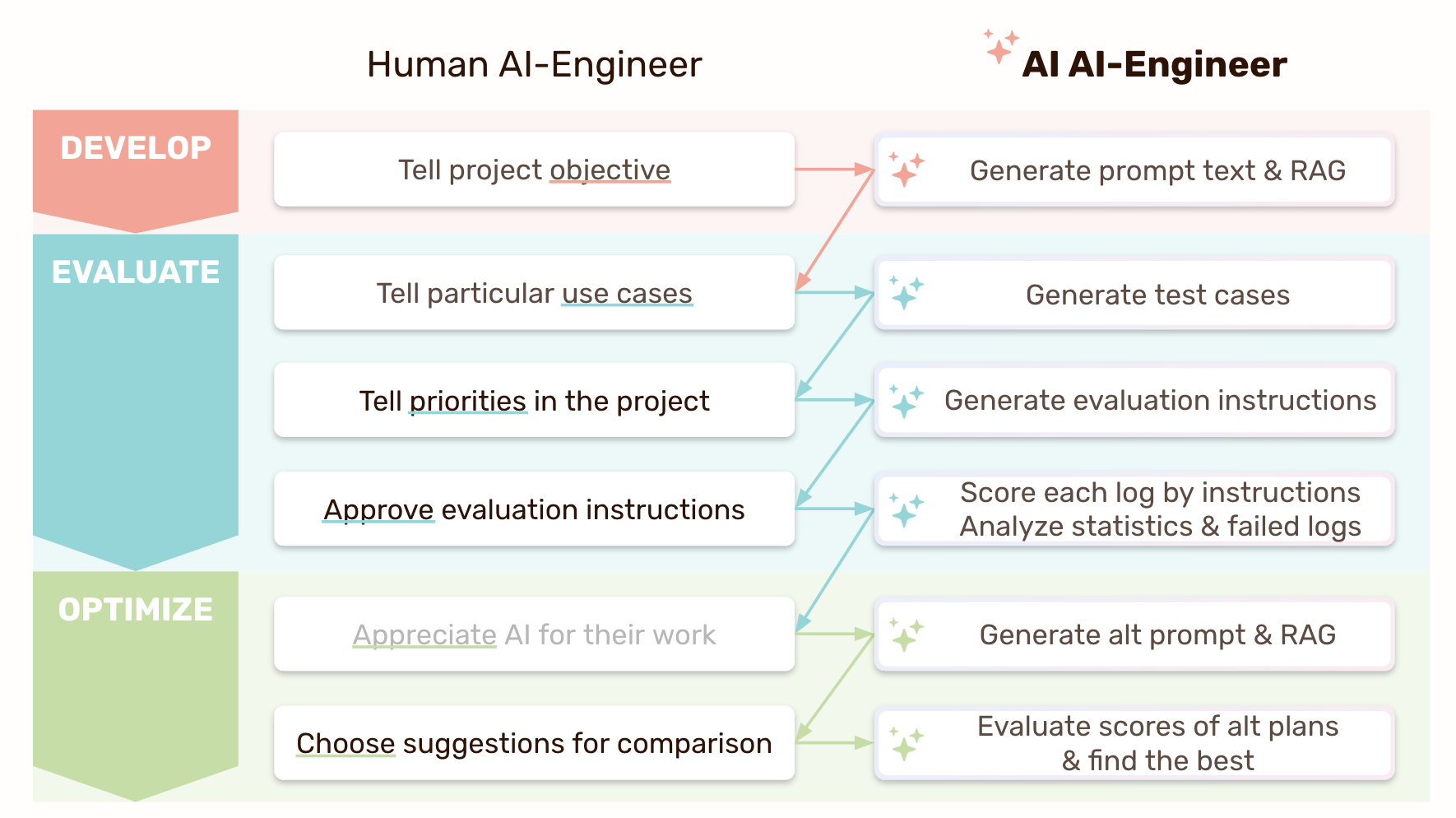

Our AI AI-Engineer is designed to follow the best practices of Human LLM DevOps, including:

- Development

  - Prompt Engineering techniques, including Chain-of-Thought with Reasoning Capabilities, Multi-step generation, and Instruction Format

  - Knowledge Tuning techniques, from Dynamic Chunking, Embedding Optimization & Multi-vector indexing, HNSW with query filters, and Reranking, to knowledge "Operations" like GraphRAG, Missing knowledge retrieval mechanism, Dynamic mix of publicly available knowledge sources, and Interleaved generation

- Evaluation

- Optimization

In general, this is the iterative process that Human AI-Engineers have long worked on when building AI systems.

But to align our AI AI-Engineers' work with the success of developers and product managers, we let Human AI-Engineers set "Project Objectives, Priorities and Use Cases" at the beginning and at key checkpoints. This setup allows the AI AI-Engineers to pursue these goals and iteratively refine their approach toward them.

You may think it's a good "productivity" tool for Human AI-Engineers, but it's not only that. Because the AI can work 24/7 to "deep-iterate" toward your goals, it can try numerous patterns, much like a brute-force search, to find the optimal one, at a scale that manual human iteration cannot handle.

And we're proposing a new era of AI engineering: by letting the AI AI-Engineer handle the highly technical but heavy work of tuning your AI, Human AI-Engineers on Teammately can now shift their focus to planning how to better adapt AI capabilities to existing products, exploring how to better align AI with broader human preferences and requirements, or finding even more creative AI Agent use cases.

This is the "Next Best Practice" in AI engineering we are proposing to the community with Teammately.

Are Human AI-Engineers no longer needed? What should Human AI-Engineers do next?

When we talk with developers (like at The AI Conference in SF, where we first announced the birth of Teammately), some developers and business managers ask me, "Are Human AI-Engineers no longer needed?"

A valid question, and I always say, "NO, NEVER!"

I say it loudly: "The role of the Human AI-Engineer gets even bigger with AI capabilities."

How?

I'm concerned that the Trough of Disillusionment will come to AI again. With inflated expectations for chat-style LLM AI, many business leaders and developers are eager to build their own version of a chat AI. But many teams will likely be disappointed to find that a simple ChatGPT wrapper is not in high demand in the way people actually work, and some may rush to conclude that AI is not the magic it was made out to be...

But fundamentally, since LLM/AI can flexibly self-judge and self-reason when it is practically tuned, there is potential for it to take on a wide range of heavy work currently done by humans. (Like what we designed on Teammately for AI-Engineering tasks!)

The future Human AI-Engineers, we believe, should rather be 1) evangelists of AI capabilities who propose grounded and productive use cases, 2) researchers who try out ideas and prove that they create value, and 3) engineers who make sure the AI works in practice, with proven, verifiable records, so that it truly reaches production rather than staying a prototype.

With proper tools and platforms to enable them, rather than falling into the bottomless swamp of AI iteration, AI (and Human AI-Engineers like you) can truly build a new era.

AI AI-Engineers alone cannot build AI, and cannot initiate a new AI project. Human AI-Engineers play a pivotal role as the bridge that brings new tech to the real world!

Example Demo

Let's take a look at some of the features of our GUI product to see how Human AI-Engineers can let AI AI-Engineers handle their jobs.

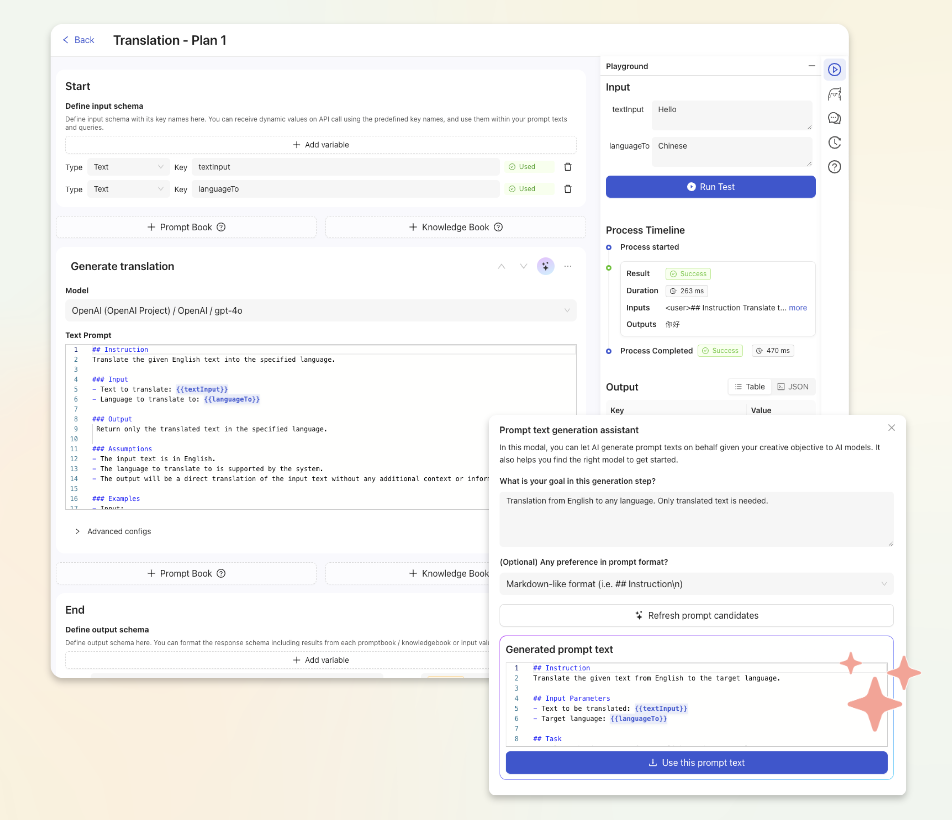

[Develop] Let AI Draft AI Architecture

Prompt engineering is a comparatively new and not yet settled technology stack. Most of the highly technical prompting methods are still debated and under continuous research, which makes it even more challenging to build from scratch.

We highly recommend testing various patterns with Teammately, but to begin with, our AI drafts the initial version of the prompt text based on your project objectives and requirements, which serves as a starting point for further refinement.

Here, you provide the first objective of your project, and our AI drafts the initial prompt text to get you started.

[Evaluate] Let AI Evaluate AI based on your Objectives

The AI model (prompt + knowledge + pretrained model configs) you built in Develop is "basically" workable as an API, and your users can start interacting with it.

However, to make your AI reliable and workable at the production level, our AI lets you simulate AI responses to diverse potential user inputs, and automatically evaluates whether they are actually safe enough.

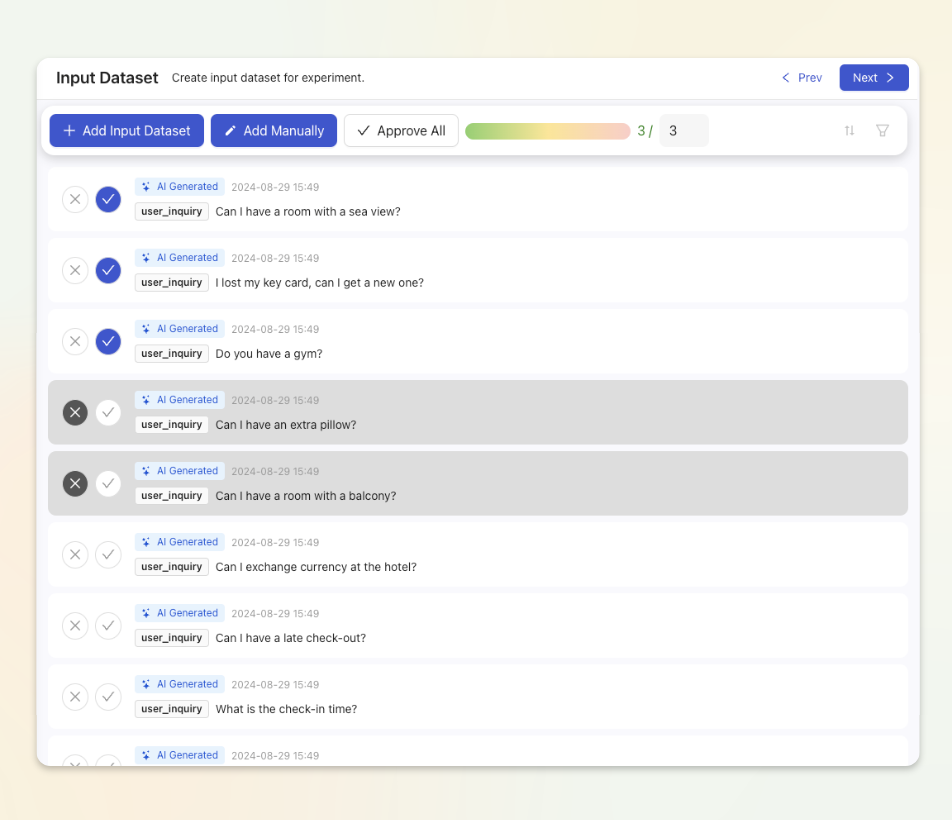

Test case synthesizer

Preparing comprehensive datasets for testing AI has been a time-consuming and challenging task for AI Ops teams. This process has often required extensive manual effort to ensure a balanced representation of both common and rare scenarios.

But on Teammately, our AI assists in preparing test datasets by synthesizing potential (user) inputs.

Once you specify the particular use cases your AI model is intended for, our AI synthesizes potential input cases with a fair diversity of topics.

AI-handled evaluations

Evaluation is fundamental to building AI, but the practice of evaluation is challenging.

In some ML training practices like RLHF, human auditors are in the loop to evaluate the AI output, which we believe is still necessary and the better practice for building foundation models if resources allow.

However, when you are required to build multiple tailored AI models in a time-constrained environment to keep up with the needs of users and the market, this is a challenging task. That's why we see many custom AI models/applications going public without enough verification.

To remedy this, there are several open-source projects and trials in the LLM AI building community that use an LLM to judge the output of another LLM, an approach that is already credited with working at almost human level if it's properly instructed.

We agree with this approach. However, those open-source projects cover only universal necessities like privacy and bias.

We believe these metrics are fundamental but not enough to say that an AI fully aligns with its developers and end users. Each AI has specific objectives and use cases, so each should have different KPIs.

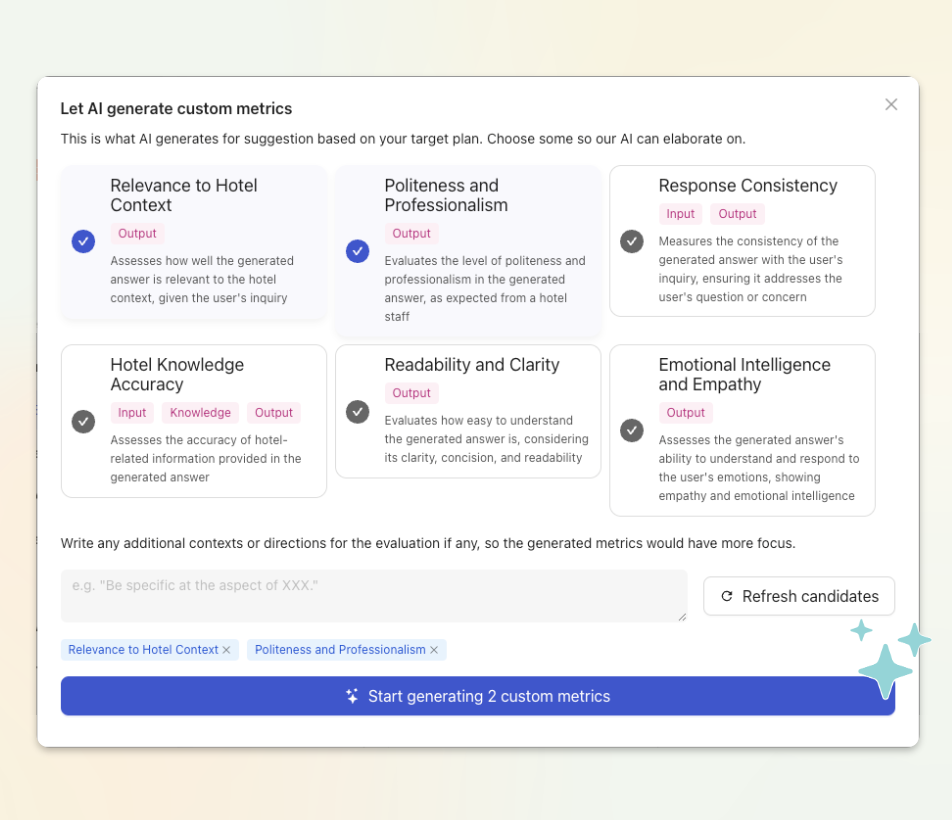

Based on that, we've developed an AI Agent that drafts custom project-specific evaluation instructions and compiles them into a metric evaluator.

It's still just prompt text, but it's similar to the traditional instructions given to human labeling/auditing teams. The compiled evaluator is then consumed by another AI evaluator, which scores the generated output on a 2- or 5-grade scale, with proper reasoning steps.
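To make the idea concrete, here is a minimal Python sketch of a compiled metric evaluator and an LLM-as-judge call. It is an illustration only, not Teammately's actual implementation or API: the names `MetricEvaluator`, `Judgement`, `evaluate` and `fake_judge` are hypothetical, the JSON reply format is an assumption, and the judge model is replaced by a stand-in function so the example runs offline.

```python
import json
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class MetricEvaluator:
    """Hypothetical container for a project-specific metric compiled into a judge prompt."""
    name: str
    instructions: str  # drafted instructions, similar to a guideline for human labelers
    grades: int        # 2 or 5; assumed here to map to integer scores 1..grades

    def __post_init__(self) -> None:
        if self.grades not in (2, 5):
            raise ValueError("grades must be 2 or 5")

    def build_prompt(self, user_input: str, ai_output: str) -> str:
        # Ask for the reasoning first, then the score, in an assumed JSON format.
        return (
            f"Metric: {self.name}\n"
            f"Instructions: {self.instructions}\n"
            f"User input: {user_input}\n"
            f"AI output: {ai_output}\n"
            "Reason step by step, then answer as JSON: "
            f'{{"reasoning": "...", "score": <integer 1-{self.grades}>}}'
        )


@dataclass(frozen=True)
class Judgement:
    score: int
    reasoning: str


def evaluate(
    evaluator: MetricEvaluator,
    user_input: str,
    ai_output: str,
    judge: Callable[[str], str],
) -> Judgement:
    """Send the compiled prompt to a judge model and validate its reply."""
    raw = judge(evaluator.build_prompt(user_input, ai_output))
    data = json.loads(raw)
    score = int(data["score"])
    if not 1 <= score <= evaluator.grades:
        raise ValueError(f"score {score} is outside 1..{evaluator.grades}")
    return Judgement(score=score, reasoning=str(data["reasoning"]))


def fake_judge(prompt: str) -> str:
    # Stand-in for a real LLM call (assumption): always returns a fixed verdict.
    return json.dumps({"reasoning": "The answer cites the refund policy.", "score": 4})


grounding = MetricEvaluator(
    name="policy_grounding",
    instructions="Score how well the answer is grounded in the store policy.",
    grades=5,
)
result = evaluate(grounding, "Can I get a refund?", "Yes, within 30 days.", fake_judge)
print(result.score, result.reasoning)
```

Validating the score range on the way back matters in a setup like this, because a judge model can return a value outside the scale it was asked to use.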

With this cycle, in which AI judges AI output based on AI-generated metrics, Teammately tries to make human-safe AI accessible to you.

[Optimize] Let AI Analyze AI Defects and Conduct Iteration Autonomously

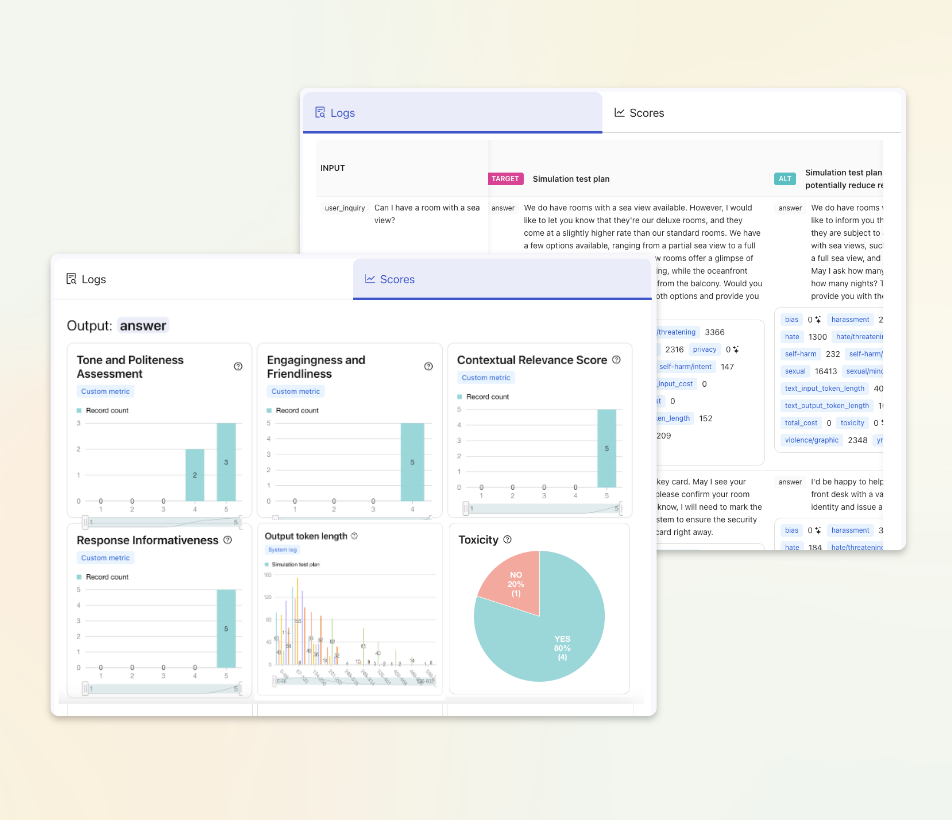

After the log-level evaluations in Evaluate, we need a big picture of the AI defects, and we need to take steps to solve them.

Here, we've built another AI Agent that works as an AI data scientist to gain insights from the evaluation results.

It works cooperatively with the AI Evaluator to dive deep, including segment/dimension-based analysis, to grasp the trends.
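As a rough illustration of what segment/dimension-based analysis means, the Python sketch below groups evaluation scores by a chosen dimension and flags the weakest segments. The record layout, the `topic` and `language` dimensions, and the function names are assumptions made for this example, not Teammately's data model.

```python
from collections import defaultdict
from statistics import mean
from typing import Dict, List


def mean_score_by_segment(records: List[dict], dimension: str) -> Dict[str, float]:
    """Average the evaluation score of each segment along one dimension."""
    buckets = defaultdict(list)
    for record in records:
        buckets[record[dimension]].append(record["score"])
    return {segment: mean(scores) for segment, scores in buckets.items()}


def weakest_segments(records: List[dict], dimension: str, threshold: float) -> List[str]:
    """Return segments whose average score is below the threshold, worst first."""
    averages = mean_score_by_segment(records, dimension)
    below = [segment for segment, avg in averages.items() if avg < threshold]
    return sorted(below, key=lambda segment: averages[segment])


# Hypothetical log-level evaluation results on a 5-grade scale.
records = [
    {"topic": "billing", "language": "en", "score": 5},
    {"topic": "billing", "language": "ja", "score": 4},
    {"topic": "returns", "language": "en", "score": 2},
    {"topic": "returns", "language": "ja", "score": 1},
]

print(mean_score_by_segment(records, "topic"))
print(weakest_segments(records, "topic", threshold=3.0))
print(weakest_segments(records, "language", threshold=3.0))
```

Slicing the same scores along different dimensions is what surfaces a trend such as "answers about returns are weak regardless of language", which a single overall average would hide.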

Following the analysis, our AI compiles an analytical report that includes a problem narrative and key takeaways, which serve as the foundation for planning alternative approaches.

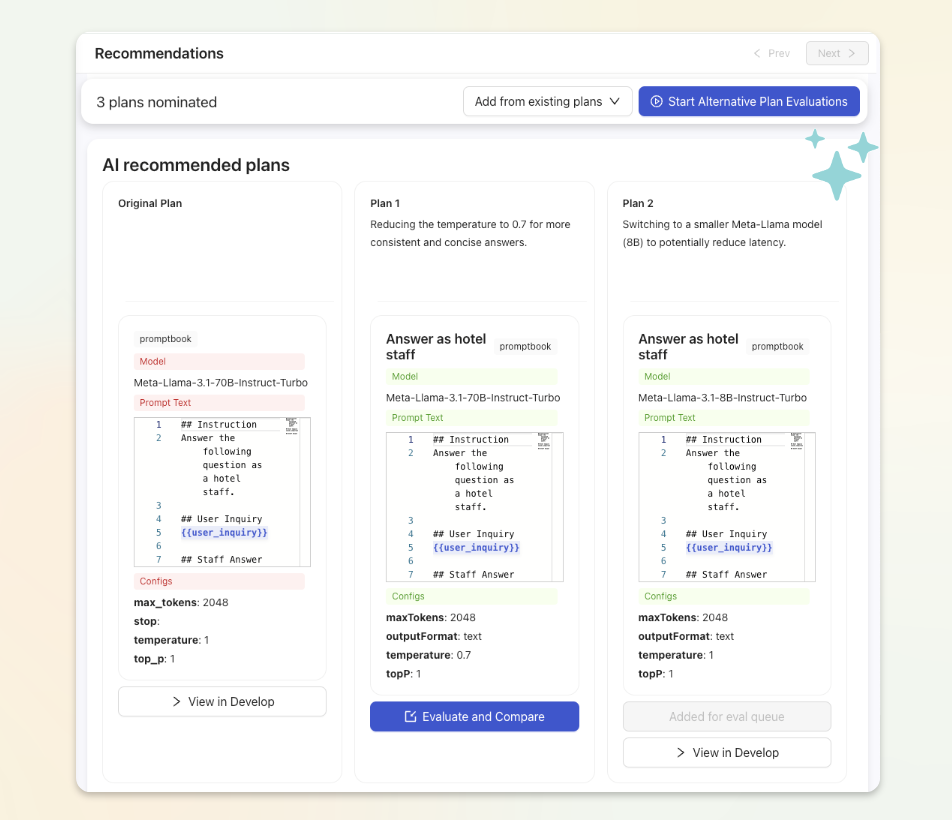

Based on the problem narratives and takeaways from the AI data scientist, another AI generates potential solutions from the available Develop options, ranging from model switches and config updates to prompt tuning and knowledge (RAG) upgrades.

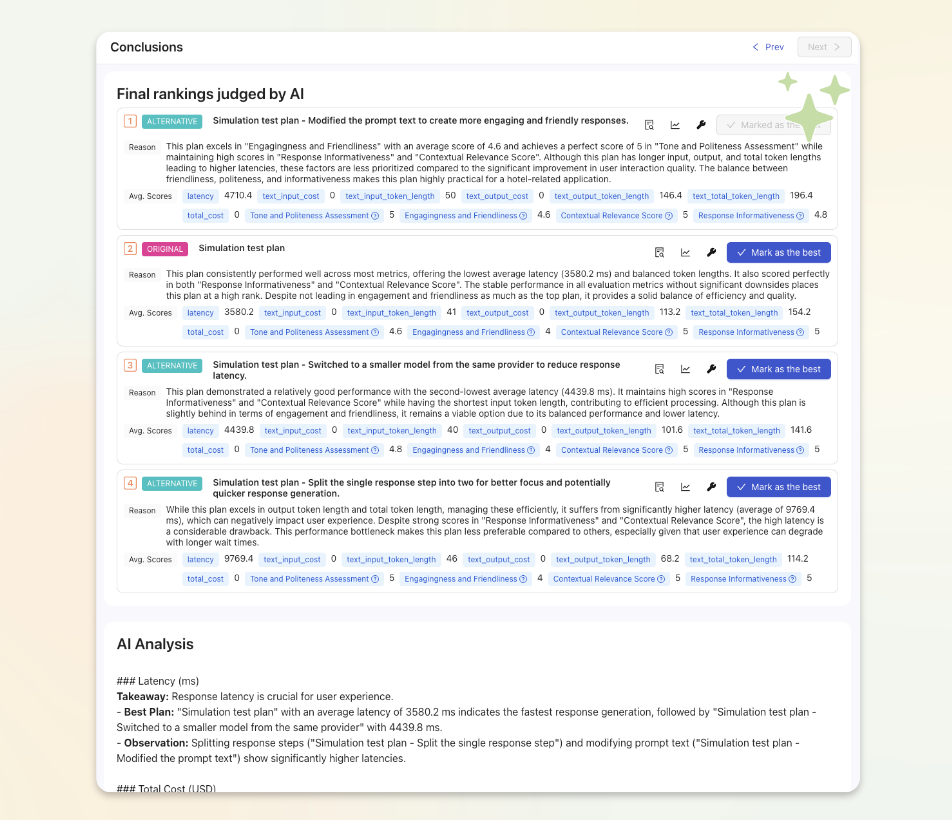

For the proposed alternative AI architectures, it runs the simulation-evaluation-analysis loop again with the same test dataset and eval metrics to compare quality and performance.

After reviewing scores and trends of several architectures, our AI finds the optimal architecture that best aligns with the project objective.
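The comparison step can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions, not how Teammately selects architectures internally: each candidate architecture is modeled as a plain function from input to output, each metric as a function returning a score between 0 and 1, and all names (`score_architecture`, `pick_best`, the toy candidates and metrics) are hypothetical.

```python
from statistics import mean
from typing import Callable, Dict, List, Tuple

Architecture = Callable[[str], str]   # maps a user input to an AI output
Metric = Callable[[str, str], float]  # maps (input, output) to a score in [0, 1]


def score_architecture(arch: Architecture, test_inputs: List[str], metrics: List[Metric]) -> float:
    """Run one candidate over the shared test set and average all metric scores."""
    per_case = []
    for text in test_inputs:
        output = arch(text)
        per_case.append(mean([metric(text, output) for metric in metrics]))
    return mean(per_case)


def pick_best(
    candidates: Dict[str, Architecture],
    test_inputs: List[str],
    metrics: List[Metric],
) -> Tuple[str, Dict[str, float]]:
    """Score every candidate on the same data and metrics, then pick the top one."""
    scores = {
        name: score_architecture(arch, test_inputs, metrics)
        for name, arch in candidates.items()
    }
    best = max(scores, key=lambda name: scores[name])
    return best, scores


# Toy stand-ins for real architectures and evaluators (assumptions for the demo).
candidates = {
    "baseline": lambda question: "I don't know.",
    "with_rag": lambda question: f"According to the policy, {question.lower()} is covered.",
}
metrics = [
    lambda question, output: 1.0 if "policy" in output else 0.0,  # grounded in knowledge
    lambda question, output: 1.0 if len(output) <= 80 else 0.0,   # concise enough
]
test_inputs = ["Refunds", "Shipping"]

best, scores = pick_best(candidates, test_inputs, metrics)
print(best, scores)
```

Holding the test inputs and metrics fixed across candidates is the important design choice here, since it is what makes the scores comparable between architectures.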

This is a typical example of how our AI builds and iterates on your AI, and it's already available to you as a beta version!

Our vision & milestone

Teammately is generally a tool for developers: we require basic knowledge of AI, LLMs and relevant software engineering (like APIs) in order to build the capabilities and embed them into real-world products.

But we envision building an even more trustworthy tool that enables AI builders to achieve "Human-safe & Human-aligned AI" with Teammately. The following areas are the primary topics we're actively working on.

- Inclusive AI Development

- Auditable / Verifiable AI

- Better visibility on AI alignment

- Improving objective-oriented knowledge tuning capabilities

We're also continuously expanding our documentation and content on how the future Human AI-Engineer can create more value, in areas like AI objective setting, how to build better AI evaluation metrics, and how to define and quantify AI alignment, as well as the extensive AI use cases we propose with the power of Teammately.

For more updates, subscribe to our newsletter, or join discussions with us!

Quick Q&A

- Q: Is this an open-source project?

- A: No, we judged that quality and safe AI iteration can be best delivered as a managed service. If you need to know more about the technical stack or code details for transparency, we're open about most of it!

- Q: How much do we have to pay?

- A: We're in beta, so all features are provided for free at this time. For individual developers, it should stay free even after the beta period. We may set some hard limits on the number of requests and features in the free version. (Well, we currently cover all the generative AI costs for your AI iteration. We'd be very pleased to receive any feedback in exchange!)

- Q: Your icon is cute! What is that? What's the name?

- A: Thank you! It's "Yuzu", a cockatiel! Free swag is available, and we can give you some when we meet in person in SF. Talk to us!

Next Steps

Recommended next steps: